.NET Core Performance Optimization Techniques

August 2, 2024

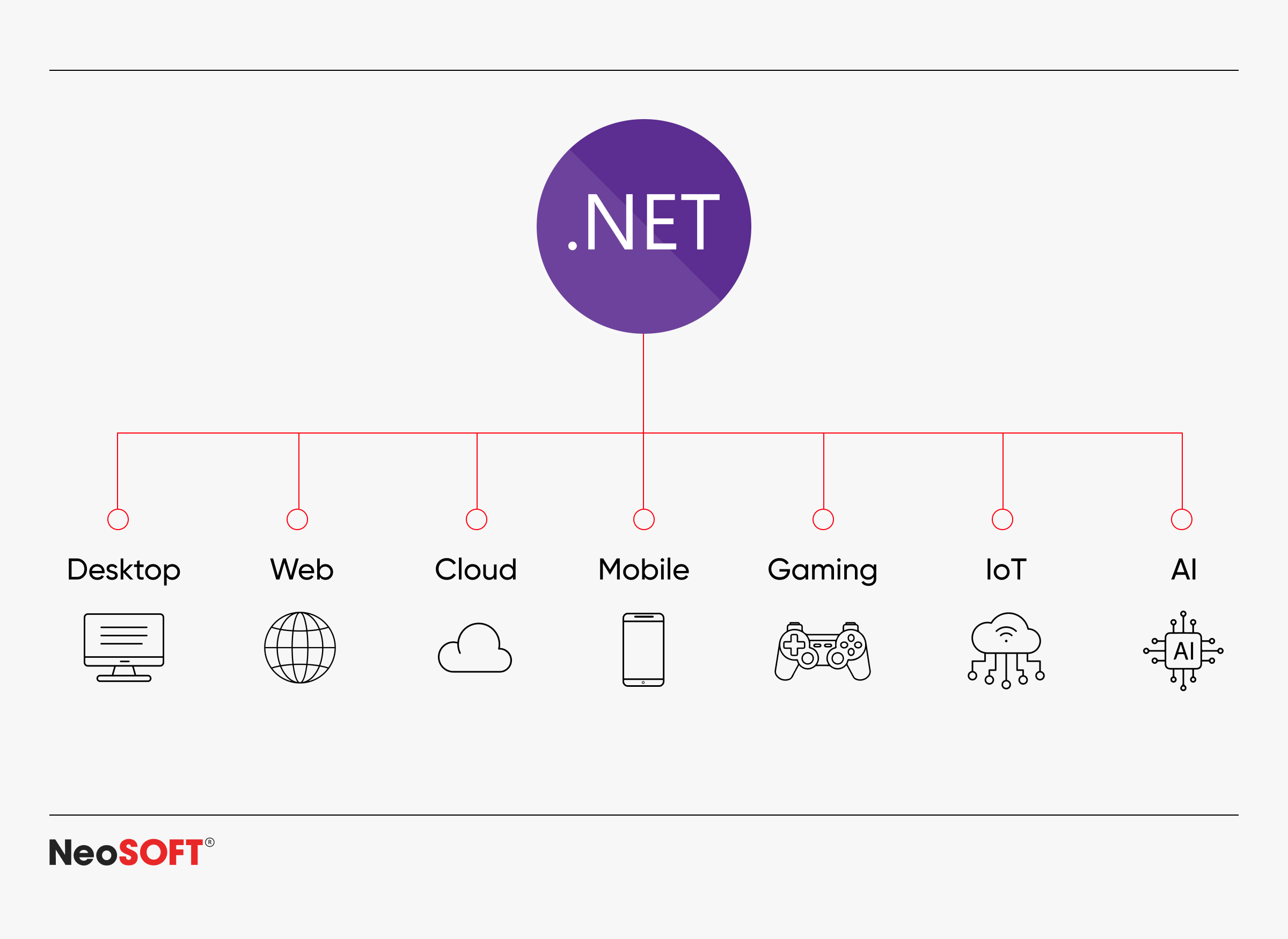

Performance optimization is crucial for modern .NET Core applications: it improves user experience, shortens response times, and makes efficient use of system resources. This article covers ten techniques for improving your application’s performance. Tracking key performance metrics through continuous monitoring helps developers find the parts of a system that limit performance. The techniques include tuning server configurations, reducing CPU utilization, and improving database operations. Lazy loading and background jobs help manage load and resource utilization. Applied directly to application code and in line with industry practice, these methods can significantly reduce response times and improve latency, memory usage, and overall system efficiency. Practical examples illustrate how they apply.

Profiling and Monitoring

Finding and fixing performance problems in .NET Core apps requires regular profiling and monitoring. Performance testing tools give developers the metrics and diagnostic data needed to identify bottlenecks and other areas needing improvement.

Key Techniques:

Performance Evaluation: Regularly run performance tests under different load scenarios to evaluate how your application behaves in real-world conditions. By simulating high traffic and diverse user interactions, tools like Apache JMeter (for backend/API performance) and browser-based performance profilers (like those built into Chrome DevTools or the Lighthouse extension) let you identify performance bottlenecks early in the development cycle. This proactive approach lets you measure and optimize CPU utilization, memory consumption, network latency, and other critical performance metrics, leading to a more responsive and scalable application.

Continuous Monitoring: For real-time tracking of system performance, use continuous monitoring tools like Application Insights or Prometheus. These tools expose memory allocation, CPU utilization, and other indicators of system health, so teams can react to regressions before users notice them. Tracking these indicators regularly helps keep applications responsive under varying workloads. Profiling tools such as dotTrace and ANTS Performance Profiler provide detailed performance measurements and can point to the underlying cause of performance degradation, letting developers identify the specific parts of the application code responsible for a problem.
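Before reaching for a full profiler, a quick in-code measurement can show whether a suspected hot spot is worth investigating. The following is a minimal sketch and not a replacement for the tools above; the `Measure` class and its `Run` method are hypothetical names introduced for illustration.

```csharp
using System;
using System.Diagnostics;

public static class Measure
{
    // Runs the action once and returns the elapsed wall-clock time and
    // the number of bytes allocated on the calling thread while it ran.
    public static (TimeSpan Elapsed, long AllocatedBytes) Run(Action action)
    {
        long before = GC.GetAllocatedBytesForCurrentThread();
        var stopwatch = Stopwatch.StartNew();
        action();
        stopwatch.Stop();
        long after = GC.GetAllocatedBytesForCurrentThread();
        return (stopwatch.Elapsed, after - before);
    }
}
```

A single run is noisy, so treat the numbers as a rough indication and repeat the measurement several times before drawing conclusions.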

Asynchronous Programming and Parallel Processing for Optimal Performance

Harnessing the power of asynchronous programming and parallel processing can drastically improve the responsiveness and efficiency of your .NET Core applications.

Key Techniques:

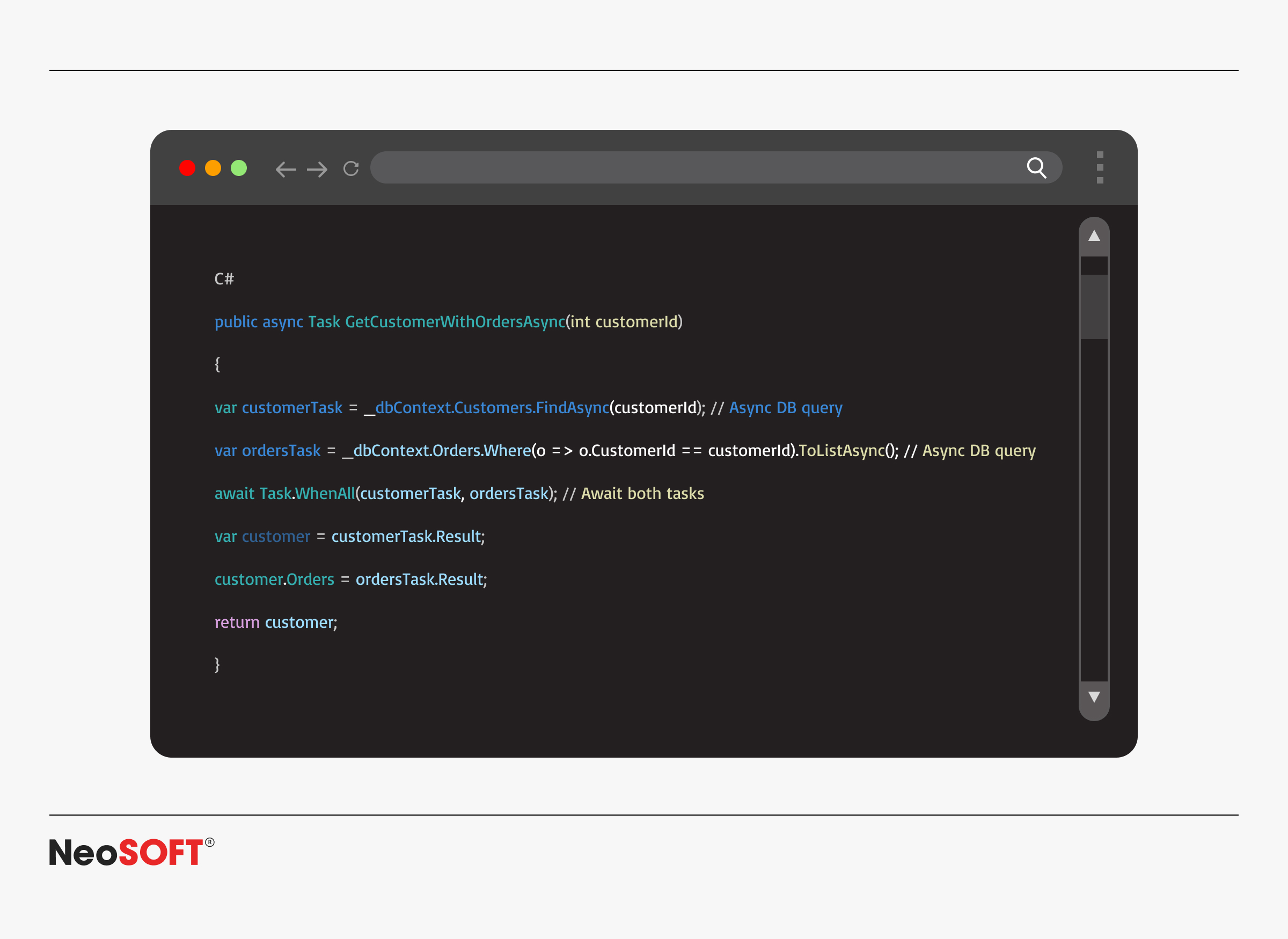

Async/Await (I/O-Bound Operations): Use async and await for tasks like network requests or database queries where your application is waiting on external systems. With this technique, the thread is released while the I/O operation is in flight, freeing your program to execute other work.

Task Parallel Library (TPL) (CPU-Bound Operations): To make full use of your hardware, use the Task Parallel Library (TPL) to divide computation-heavy work among several threads. TPL simplifies parallel processing and helps you write more efficient concurrent code.

Example:
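The two techniques above can be sketched as follows. This is a minimal illustration under stated assumptions: `ConcurrencyDemo` and its methods are hypothetical names, and `Task.Delay` stands in for a real network or database call.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

public static class ConcurrencyDemo
{
    // Simulates an I/O-bound call such as an HTTP request or database query.
    // Task.Delay stands in for the real wait; no thread is blocked meanwhile.
    public static async Task<int> FetchLengthAsync(string resource)
    {
        await Task.Delay(100);
        return resource.Length;
    }

    // Starts every I/O-bound operation first and then awaits them together,
    // so the waits overlap instead of running one after another.
    public static async Task<int[]> FetchAllAsync(string[] resources)
    {
        Task<int>[] pending = resources.Select(r => FetchLengthAsync(r)).ToArray();
        return await Task.WhenAll(pending);
    }

    // CPU-bound work: Parallel.For partitions the index range across
    // thread-pool threads. Each iteration writes only to its own slot,
    // so no locking is needed.
    public static long[] SquareAll(int count)
    {
        var results = new long[count];
        Parallel.For(0, count, i => results[i] = (long)i * i);
        return results;
    }
}
```

`Task.WhenAll` returns results in the same order as the input tasks, so callers can match each result to its request by index.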

Optimizing Database Operations

Database interactions frequently have a big impact on how well an application runs. Enhancing the way your application communicates with the database can lead to major improvements in scalability and general responsiveness.

Key Techniques:

Indexing: Create indexes on frequently queried columns to speed up data retrieval.

Efficient Queries: Write optimized SQL queries to minimize the load on your database. Avoid excessive joins, unnecessary subqueries, and inefficient patterns.

Caching: To minimize database round trips and improve response times, cache frequently read data in memory (using MemoryCache) or in a distributed cache such as Redis or NCache.

Batching: To cut the overhead of issuing many separate queries, combine similar operations into a single batch where possible.

Connection Pooling: Reuse existing database connections from a pool rather than establishing a new one for every query, which avoids the cost of repeated connection setup.
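As a rough sketch of the batching idea, the helper below groups lookups so that each group costs one round trip instead of one per item. `QueryBatcher` is a hypothetical name, and the `fetchBatch` delegate is an assumed stand-in for a single database call (for example a query with a `WHERE Id IN (...)` clause); no real data-access library is used here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class QueryBatcher
{
    // Splits ids into groups of at most batchSize and calls fetchBatch
    // once per group. fetchBatch is a hypothetical stand-in for one
    // database round trip.
    public static List<TResult> FetchInBatches<TResult>(
        IReadOnlyList<int> ids,
        int batchSize,
        Func<IReadOnlyList<int>, IEnumerable<TResult>> fetchBatch)
    {
        if (batchSize <= 0) throw new ArgumentOutOfRangeException(nameof(batchSize));

        var results = new List<TResult>(ids.Count);
        for (int start = 0; start < ids.Count; start += batchSize)
        {
            List<int> batch = ids.Skip(start).Take(batchSize).ToList();
            results.AddRange(fetchBatch(batch));
        }
        return results;
    }
}
```

With ten IDs and a batch size of four, the delegate runs three times rather than ten. The right batch size depends on the database and query, so it is worth measuring rather than guessing.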

Caching Strategies

Key Techniques:

In-Memory Caching: Tools such as MemoryCache keep frequently accessed data in the application’s own memory, which reduces response time by avoiding repeated database queries and the CPU work of rebuilding the same results. Populating entries lazily, on first request, means memory is spent only on data that is actually read.

Example:

Consider a .NET Core application that frequently queries customer data. For faster load times and improved performance, index the customer ID column and lazy-load each customer’s related orders.

Distributed Caching: Solutions like Redis or NCache suit larger applications that run on several servers. They provide a cache shared across those servers, improving load times and system reliability. Because the cache lives outside any single application server, every instance behind a load balancer sees the same cached data, and the cache remains available when individual servers are added or removed. This gives more consistent performance under varying load.
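The in-memory pattern described above can be sketched with only the base class library. This is an illustrative stand-in, not the MemoryCache API: `SimpleCache` is a hypothetical class, and it omits features a production cache provides, such as size limits and eviction of expired entries.

```csharp
using System;
using System.Collections.Concurrent;

// A deliberately small time-based cache built on ConcurrentDictionary.
public sealed class SimpleCache<TKey, TValue> where TKey : notnull
{
    private readonly ConcurrentDictionary<TKey, (TValue Value, DateTime ExpiresUtc)> _entries =
        new ConcurrentDictionary<TKey, (TValue Value, DateTime ExpiresUtc)>();
    private readonly TimeSpan _timeToLive;

    public SimpleCache(TimeSpan timeToLive) => _timeToLive = timeToLive;

    // Returns the cached value if it has not expired; otherwise calls the
    // loader (for example a database query) and stores the result.
    // Callers that miss at the same moment may each run the loader.
    public TValue GetOrAdd(TKey key, Func<TKey, TValue> loader)
    {
        if (_entries.TryGetValue(key, out var entry) && entry.ExpiresUtc > DateTime.UtcNow)
        {
            return entry.Value;
        }

        TValue value = loader(key);
        _entries[key] = (value, DateTime.UtcNow + _timeToLive);
        return value;
    }
}
```

The loader runs only on a miss, so a second request for the same key within the time-to-live is served from memory without touching the database.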

Load Balancing

Load balancing is a crucial component of performance optimization for applications handling large amounts of traffic. By distributing incoming requests across several servers, load balancing reduces response times and ensures efficient resource use.

Key Techniques:

Server Configurations: Correctly configured load balancers such as Nginx or AWS Elastic Load Balancing distribute traffic effectively, improving speed and reliability. By dividing incoming requests among multiple servers, they prevent any single server from becoming a bottleneck, which speeds up response times and maintains availability under fluctuating loads.

Horizontal Scaling: Adding servers to handle increased demand (horizontal scaling) can improve performance. By distributing the workload across multiple servers, horizontal scaling lets the application handle higher traffic volumes without degrading performance, and capacity can be added or removed as user demand changes.

Efficient Memory Management

Efficient memory management is crucial for maintaining system performance. Proper memory handling can reduce bottlenecks, prevent memory leaks, and improve the stability of .NET Core applications.

Key Techniques:

Garbage Collection: Tune garbage collection settings so that .NET Core applications manage memory efficiently. The .NET garbage collector (GC) can be configured, for example by choosing between workstation and server GC or by enabling concurrent (background) collection, and reducing allocations lowers how often collections run. These adjustments reduce GC-related pauses, improving application responsiveness and resource utilization.

Pooling: Object pooling reuses objects instead of creating new ones, which reduces memory allocation overhead in .NET Core applications. It is particularly beneficial for objects that are expensive to create or allocated very frequently, such as large buffers or database connections. Pooled objects can also be created lazily, on first use. Reusing objects lowers pressure on the garbage collector and improves the scalability and stability of applications.
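One readily available form of pooling in .NET is the shared array pool in `System.Buffers`. The sketch below rents a buffer for a stream copy instead of allocating a new array on each call; the `PooledCopy` class is a hypothetical name used for illustration.

```csharp
using System;
using System.Buffers;
using System.IO;

public static class PooledCopy
{
    // Copies source to destination using a buffer rented from the shared
    // pool rather than allocating a new byte[] on every call.
    public static long Copy(Stream source, Stream destination, int bufferSize = 81920)
    {
        // The rented array may be larger than the requested size.
        byte[] buffer = ArrayPool<byte>.Shared.Rent(bufferSize);
        long total = 0;
        try
        {
            int read;
            while ((read = source.Read(buffer, 0, buffer.Length)) > 0)
            {
                destination.Write(buffer, 0, read);
                total += read;
            }
        }
        finally
        {
            // Always return the buffer so later callers can reuse it.
            ArrayPool<byte>.Shared.Return(buffer);
        }
        return total;
    }
}
```

A rented buffer is not cleared by default, so code should only read the portion it has just written, as the loop above does with the `read` count.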

Optimizing Application Code

Fine-tuning application code is fundamental to performance optimization. Ensuring the code is efficient, follows best practices, and minimizes resource usage can lead to significant performance gains.

Key Techniques:

Code Review: Regular code reviews help identify inefficient code and potential performance bottlenecks within .NET Core applications. Reviews and the refactoring that follows pinpoint areas for optimization and keep the code clean, so the application continues to meet its performance targets as it grows.

Optimized Algorithms: To maximize performance in .NET Core applications, implement optimized algorithms and choose efficient data structures. Algorithms with lower time complexity improve system responsiveness and reduce computational overhead. Data structures like balanced trees and hash tables make lookups and updates efficient and reduce resource usage, which improves application speed and user experience.
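To illustrate the effect of choosing a better data structure, the sketch below solves the same problem twice: once with nested loops and once with a hash set. `DuplicateFinder` is a hypothetical class written for this comparison.

```csharp
using System.Collections.Generic;

public static class DuplicateFinder
{
    // O(n^2): compares every pair of items.
    public static bool HasDuplicateQuadratic(IReadOnlyList<int> items)
    {
        for (int i = 0; i < items.Count; i++)
        {
            for (int j = i + 1; j < items.Count; j++)
            {
                if (items[i] == items[j]) return true;
            }
        }
        return false;
    }

    // O(n) on average: HashSet<T>.Add returns false when the value
    // is already present, so each item is checked in constant time.
    public static bool HasDuplicateHashed(IEnumerable<int> items)
    {
        var seen = new HashSet<int>();
        foreach (int item in items)
        {
            if (!seen.Add(item)) return true;
        }
        return false;
    }
}
```

Both methods return the same answer, but the hashed version trades a little extra memory for far fewer comparisons as the input grows.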

Reducing Latency with Content Delivery Networks (CDNs)

Key Techniques:

CDN Integration: Serving static files through a content delivery network (CDN) such as Cloudflare or Akamai shortens the distance data must travel and greatly improves load speeds. CDNs store content in many geographic locations and deliver it from the servers nearest to each user, reducing latency. This also increases scalability by offloading traffic from origin servers.

Edge Servers: Using edge servers to cache content closer to end-users further reduces latency and enhances system performance. By strategically placing them in multiple locations to store and distribute cached content, edge servers ensure speedier access for users in different regions. Edge servers enhance responsiveness and dependability by reducing the number of network hops and distance data travels. This is especially beneficial for dynamic content and applications that need real-time data delivery. Integrating edge caching with CDN solutions enhances overall application performance and user experience.

Implementing Background Jobs

Delegating long-running tasks to background jobs improves your application’s responsiveness: the work completes asynchronously while the main application thread stays free to respond to user requests.

Key Techniques:

Task Scheduling: Task scheduling libraries like Hangfire handle background operations and let you schedule tasks at specific times or intervals. This offloads work from the main application thread and suits activities that are not time-sensitive. Automating task execution in this way improves overall system responsiveness and resource efficiency.

Asynchronous Processing: Asynchronous processing improves efficiency and resource utilization for non-critical work. By decoupling task execution from the main application thread, it ensures that operations such as I/O-bound tasks do not block the program’s responsiveness. The application can process additional requests in the meantime, increasing throughput and improving user experience. Asynchronous programming models, such as the async/await pattern in .NET Core, support efficient multitasking and improve the scalability of applications handling diverse workloads.

Example:

A .NET Core application that sends email notifications can offload this task to a background job, ensuring user interactions remain fast and responsive.
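The email scenario can be sketched with an in-process queue from `System.Threading.Channels`. This is a simplified stand-in and not Hangfire: queued work is lost if the process stops, and `EmailQueue` and its `sendAsync` delegate are hypothetical names introduced for illustration.

```csharp
using System;
using System.Threading.Channels;
using System.Threading.Tasks;

// A minimal in-process background queue for email notifications.
public sealed class EmailQueue
{
    private readonly Channel<string> _channel = Channel.CreateUnbounded<string>();
    private readonly Func<string, Task> _sendAsync;

    // sendAsync is a hypothetical delegate that performs the actual delivery.
    public EmailQueue(Func<string, Task> sendAsync) => _sendAsync = sendAsync;

    // Called from the request path: enqueues the recipient and returns immediately.
    public bool Enqueue(string recipient) => _channel.Writer.TryWrite(recipient);

    // Signals that no more items will be added, so the worker can finish.
    public void Complete() => _channel.Writer.Complete();

    // Runs on a background task and sends queued emails one at a time.
    public async Task RunWorkerAsync()
    {
        await foreach (string recipient in _channel.Reader.ReadAllAsync())
        {
            try
            {
                await _sendAsync(recipient);
            }
            catch (Exception ex)
            {
                // A failed send must not stop the worker; real code would log or retry.
                Console.Error.WriteLine($"Send to {recipient} failed: {ex.Message}");
            }
        }
    }
}
```

The request handler only pays the cost of `Enqueue`, while delivery happens on the worker. For jobs that must survive restarts or run on a schedule, a persistent scheduler such as Hangfire is the more appropriate choice.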

Utilizing Lazy Initialization

Lazy initialization is a performance-optimization technique that postpones object creation until the object is actually needed. This approach helps reduce initial load times and optimize resource usage.

Key Techniques:

Lazy<T>: Use the Lazy<T> class to defer creating an object until it is first accessed.

Deferred Execution: Deferring expensive operations prevents unnecessary resource consumption. By postponing an operation until its result is explicitly needed, developers avoid paying for work whose result is never used, which lowers CPU utilization and improves response times. This technique is particularly beneficial for expensive database queries and CPU-bound computations.

Example:

Using Lazy<T>, an expensive object is created only when it is first accessed.
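A minimal sketch of this pattern follows. `ReportService` and `LoadReferenceData` are hypothetical names, and the small array stands in for data that would be expensive to load in a real application.

```csharp
using System;

public sealed class ReportService
{
    private readonly Lazy<string[]> _referenceData;

    // Counts how many times the expensive load has run (for illustration).
    public int LoadCount { get; private set; }

    public ReportService()
    {
        // The factory does not run here; it runs on the first access to Value.
        // Lazy<T> is thread-safe by default.
        _referenceData = new Lazy<string[]>(LoadReferenceData);
    }

    // True once the data has been loaded.
    public bool IsLoaded => _referenceData.IsValueCreated;

    // The first call triggers the load; later calls reuse the same array.
    public int ReferenceCount => _referenceData.Value.Length;

    // Hypothetical stand-in for an expensive load from disk or a database.
    private string[] LoadReferenceData()
    {
        LoadCount++;
        return new[] { "EUR", "USD", "GBP" };
    }
}
```

Constructing `ReportService` is cheap because nothing is loaded until `ReferenceCount` is read, and the load happens at most once however often the property is used.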

Additional Techniques for Enhanced Performance

Beyond the ten primary techniques, some additional methods and tools can further enhance performance in .NET Core applications. These techniques address specific aspects of system performance and can provide targeted improvements.

Reducing Network Latency

Network latency can significantly hurt performance, especially for apps that depend on external APIs or services. Reducing the number of HTTP requests, compressing responses, and optimizing API usage lower latency and improve response times.
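To show what compression does to a payload, the sketch below compresses and restores a string with `GZipStream` from the base class library. `PayloadCompression` is a hypothetical helper; in an ASP.NET Core application, response compression is usually enabled through middleware rather than written by hand.

```csharp
using System.IO;
using System.IO.Compression;
using System.Text;

public static class PayloadCompression
{
    // Compresses UTF-8 text with gzip and returns the compressed bytes.
    public static byte[] Compress(string text)
    {
        byte[] raw = Encoding.UTF8.GetBytes(text);
        using var output = new MemoryStream();
        using (var gzip = new GZipStream(output, CompressionLevel.Optimal, leaveOpen: true))
        {
            gzip.Write(raw, 0, raw.Length);
        } // Disposing the GZipStream flushes the final compressed block.
        return output.ToArray();
    }

    // Restores the original text from gzip-compressed bytes.
    public static string Decompress(byte[] compressed)
    {
        using var input = new MemoryStream(compressed);
        using var gzip = new GZipStream(input, CompressionMode.Decompress);
        using var reader = new StreamReader(gzip, Encoding.UTF8);
        return reader.ReadToEnd();
    }
}
```

Repetitive text such as JSON tends to compress well, while very small or already compressed payloads may not shrink at all, so compression is best applied selectively.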

Client-Side Optimization

Client-side performance optimization is a crucial element in the overall speed of web applications. Using browser caching, optimizing images, and minifying CSS and JavaScript files can significantly decrease load times and enhance user experience.

Using gRPC

In a microservices architecture, the high-performance RPC framework gRPC can greatly speed up communication between services. Because gRPC uses HTTP/2 and compact binary serialization with Protocol Buffers, it typically achieves lower latency and higher throughput than traditional JSON-based RESTful APIs.

Implementing Circuit Breakers

Circuit breakers are a design pattern that helps improve distributed systems’ resilience and performance. By implementing circuit breakers, you can prevent cascading failures and ensure that your application can handle service outages gracefully.
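The pattern can be sketched in a few lines. This is a simplified, hand-written illustration rather than a production library: `CircuitBreaker` is a hypothetical class, the clock is injected only to make the behavior easy to test, and after the cool-down it lets all callers through instead of a single trial call.

```csharp
using System;

public sealed class CircuitBreaker
{
    private readonly int _failureThreshold;
    private readonly TimeSpan _openDuration;
    private readonly Func<DateTime> _utcNow;
    private readonly object _gate = new object();
    private int _consecutiveFailures;
    private DateTime _openedAtUtc;
    private bool _isOpen;

    public CircuitBreaker(int failureThreshold, TimeSpan openDuration)
        : this(failureThreshold, openDuration, () => DateTime.UtcNow) { }

    public CircuitBreaker(int failureThreshold, TimeSpan openDuration, Func<DateTime> utcNow)
    {
        _failureThreshold = failureThreshold;
        _openDuration = openDuration;
        _utcNow = utcNow;
    }

    public T Execute<T>(Func<T> action)
    {
        lock (_gate)
        {
            if (_isOpen)
            {
                // While open, fail fast without calling the struggling service.
                if (_utcNow() - _openedAtUtc < _openDuration)
                    throw new InvalidOperationException("Circuit is open; call rejected.");

                // Cool-down elapsed: let calls through again. The failure count
                // is still at the threshold, so one more failure re-opens it.
                _isOpen = false;
            }
        }

        try
        {
            T result = action();
            lock (_gate) { _consecutiveFailures = 0; } // success resets the count
            return result;
        }
        catch
        {
            lock (_gate)
            {
                _consecutiveFailures++;
                if (_consecutiveFailures >= _failureThreshold)
                {
                    _isOpen = true;
                    _openedAtUtc = _utcNow();
                }
            }
            throw;
        }
    }
}
```

Wrapping calls to a remote service in `Execute` means that once the service has failed repeatedly, callers get an immediate error instead of waiting on timeouts, which gives the failing service time to recover.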

Conclusion

Advanced .NET performance optimization techniques enhance the performance and responsiveness of your .NET Core applications. Investing in these optimization efforts ensures that your application meets and exceeds industry standards for performance and reliability, providing a superior overall user experience.

By focusing on these areas, your development team can ensure your application performs well under various load conditions, delivering the performance end-users expect. Continuous performance optimization, including profiling, asynchronous programming, and efficient resource management, is vital for the sustained success of .NET Core applications.
