Mastering Prompt Engineering with Large Language Models

Introduction

Prompt engineering is crafting prompts that guide large language models (LLMs) to generate desired outputs. LLMs are incredibly versatile but can be tricky to control without careful prompting. By understanding the capabilities and limitations of LLMs and by using proven prompt engineering techniques, we can create transformative applications in a wide range of domains.

LLMs are artificial intelligence models that use deep learning to understand and generate human language. They are trained on massive datasets of text and code, which enables them to perform a wide range of tasks, including:

- Text generation

- Language translation

- Creative writing

- Code generation

- Informative question answering

LLMs are still under development, but they already power a variety of applications, such as:

- Coding assistants

- Chatbots and virtual assistants

- Machine translation systems

- Text summarizers

What are some widely used Large Language Models?

Researchers and companies worldwide are developing many LLMs. Llama, ChatGPT, Mistral AI's LLMs, Falcon LLM, and similar models bring natural language capabilities to applications. LLMs are especially useful for companies and teams that want to simplify communication and data handling.

Why is prompt engineering necessary?

Prompt engineering is necessary because it allows us to control the output of LLMs. Without careful prompting, LLMs can generate irrelevant, inaccurate, or even harmful outputs. By using practical prompt engineering techniques, we can steer LLMs toward helpful, informative, and safe outputs.

How does prompt engineering work?

Prompt engineering provides LLMs with the information and instructions they need to generate the desired output. A prompt can be as simple as a single word or phrase, or it can be more complex and include examples, context, and other relevant information.

The LLM then uses the prompt to generate text: it interprets the prompt's meaning and produces text that is consistent with it.
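As a minimal sketch of this idea, the Python snippet below assembles a prompt from an instruction plus optional context and examples. The function name `build_prompt` and the section labels ("Context:", "Example input:", "Instruction:") are illustrative assumptions, not a standard format; the resulting string would be passed to whichever LLM you use.

```python
def build_prompt(instruction, context=None, examples=None):
    """Combine an instruction with optional context and examples.

    The layout is a hypothetical convention chosen for illustration.
    """
    parts = []
    if context:
        parts.append(f"Context:\n{context}")
    # Each example is an (input, output) pair shown to the model.
    for example_input, example_output in examples or []:
        parts.append(
            f"Example input: {example_input}\nExample output: {example_output}"
        )
    parts.append(f"Instruction:\n{instruction}")
    return "\n\n".join(parts)


# A prompt can be as simple as a single instruction...
simple_prompt = build_prompt("Summarize this review.")

# ...or it can carry context and examples as well.
detailed_prompt = build_prompt(
    "Summarize the review in one sentence.",
    context="Review: The headphones arrived quickly, but the left earbud "
    "stopped working after two days.",
    examples=[("Great battery, poor screen.", "Mixed: strong battery, weak display.")],
)
print(detailed_prompt)
```

Placing the instruction last is only one possible ordering; it is worth testing alternatives against the model you actually use.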

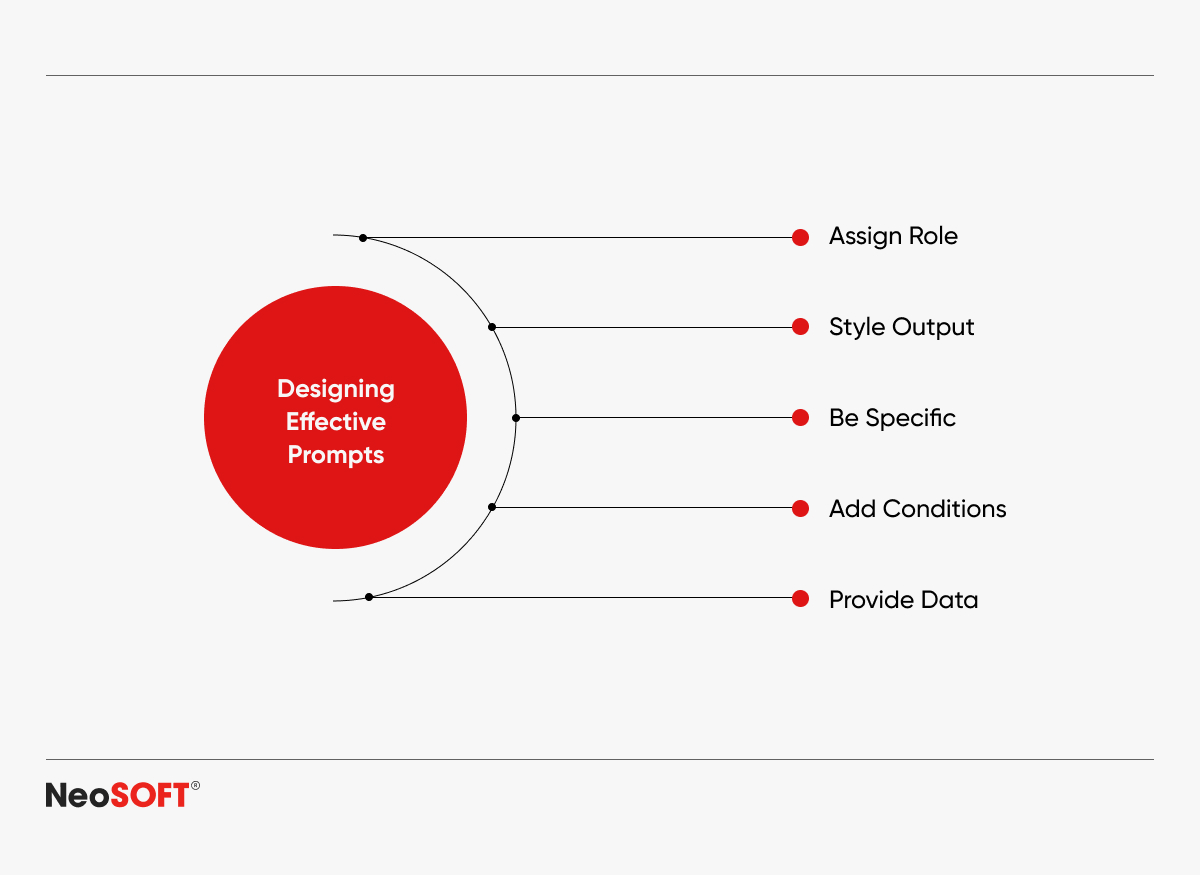

What are the best practices for prompt engineering?

The best practices for prompt engineering include the following:

- Set a clear objective. What do you want the LLM to generate? The more specific your objective, the better.

- Use concise, specific language. Replace vague or ambiguous wording with clear, direct instructions.

- Provide the LLM with all the necessary information to complete the task successfully, including examples, context, or other relevant information.

- Experiment with different prompt styles to see what works best. There is no one-size-fits-all approach to prompt engineering.

- Fine-tune the LLM with domain-specific data. If you are working on a specialized task, fine-tuning on data from that domain can help the LLM generate more accurate and relevant outputs.

- Continuously optimize your prompts as you learn more about the LLM and its capabilities.
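The experimentation and optimization practices above can be sketched as a small comparison loop. Everything here is hypothetical: `stub_model` stands in for a real LLM call so the snippet runs offline, and `brevity_score` is a toy metric. In practice you would swap in your own model client and a metric that reflects your objective.

```python
def compare_prompts(prompts, model, score):
    """Run each prompt through `model` and rank the results, best score first."""
    results = []
    for prompt in prompts:
        output = model(prompt)
        results.append((score(output), prompt, output))
    results.sort(key=lambda item: item[0], reverse=True)
    return results


def stub_model(prompt):
    # Hypothetical stand-in for a real LLM call: it answers tersely only
    # when the prompt states a clear length constraint.
    if "one sentence" in prompt:
        return "The battery lasts two days."
    return "Well, there are many things one could say about this product. " * 3


def brevity_score(output):
    # Toy metric: shorter outputs score higher.
    return -len(output.split())


candidates = [
    "Tell me about the review.",
    "Summarize the review in one sentence.",
]
ranking = compare_prompts(candidates, stub_model, brevity_score)
best_score, best_prompt, best_output = ranking[0]
print(best_prompt)
```

Keeping the model and the metric as plain function arguments makes it easy to rerun the same comparison as prompts, models, or objectives change.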

Examples of effective prompt engineering

Personality: “Creative Storyteller”

This prompt tells the LLM to generate text in a creative and engaging style.

One-shot learning: “Calculate Square Root: 34567 ➜ 185.92.”

This prompt tells the LLM to calculate a square root. It includes one worked example (34567 ➜ 185.92), which shows the LLM both the task and the expected output format.
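A one-shot prompt like this can be built programmatically. The sketch below is one possible layout, not a required format: the helper name `one_shot_prompt`, the `->` separator, and the new input `2024` are assumptions made for illustration. The example answer is computed with `math.sqrt` rather than typed by hand, so the demonstration shown to the model is known to be correct.

```python
import math


def one_shot_prompt(task, example_input, example_output, new_input):
    """Format a task with exactly one worked example, then the new input."""
    return (
        f"{task}\n"
        f"{example_input} -> {example_output}\n"
        f"{new_input} ->"
    )


# Compute the worked example so the demonstration is verifiably correct.
example_value = 34567
example_answer = f"{math.sqrt(example_value):.2f}"

prompt = one_shot_prompt("Calculate Square Root:", example_value, example_answer, 2024)
print(prompt)
```

Ending the prompt with an unfinished `2024 ->` line invites the model to complete the pattern set by the example.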

Avoiding hallucinations: “Stay on Math Domain.”

This prompt tells the LLM to stay within the domain of mathematics when generating text. Constraining the domain helps reduce hallucinations, which are outputs that are factually incorrect or irrelevant to the task.

Avoiding harmful content: “Promote Non-Violent Text.”

This prompt tells the LLM to generate non-violent text that promotes peace.
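Personality and safety instructions like the ones above are often supplied as a system message that precedes the user's request. The sketch below assumes the role/content message list used by many chat-style LLM APIs; the exact schema depends on your provider, and `build_messages` is a hypothetical helper written for this article.

```python
def build_messages(user_text, persona=None, guardrails=()):
    """Return a chat-style message list with an optional system message."""
    system_lines = []
    if persona:
        system_lines.append(f"You are a {persona}.")
    system_lines.extend(guardrails)

    messages = []
    if system_lines:
        # Persona and guardrails are merged into a single system message.
        messages.append({"role": "system", "content": " ".join(system_lines)})
    messages.append({"role": "user", "content": user_text})
    return messages


messages = build_messages(
    "Write a short story about two rival villages.",
    persona="creative storyteller",
    guardrails=[
        "Stay within the requested topic.",
        "Keep the text non-violent and promote peaceful resolutions.",
    ],
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Instructions of this kind steer the model but do not guarantee compliance, so outputs should still be reviewed or filtered where safety matters.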

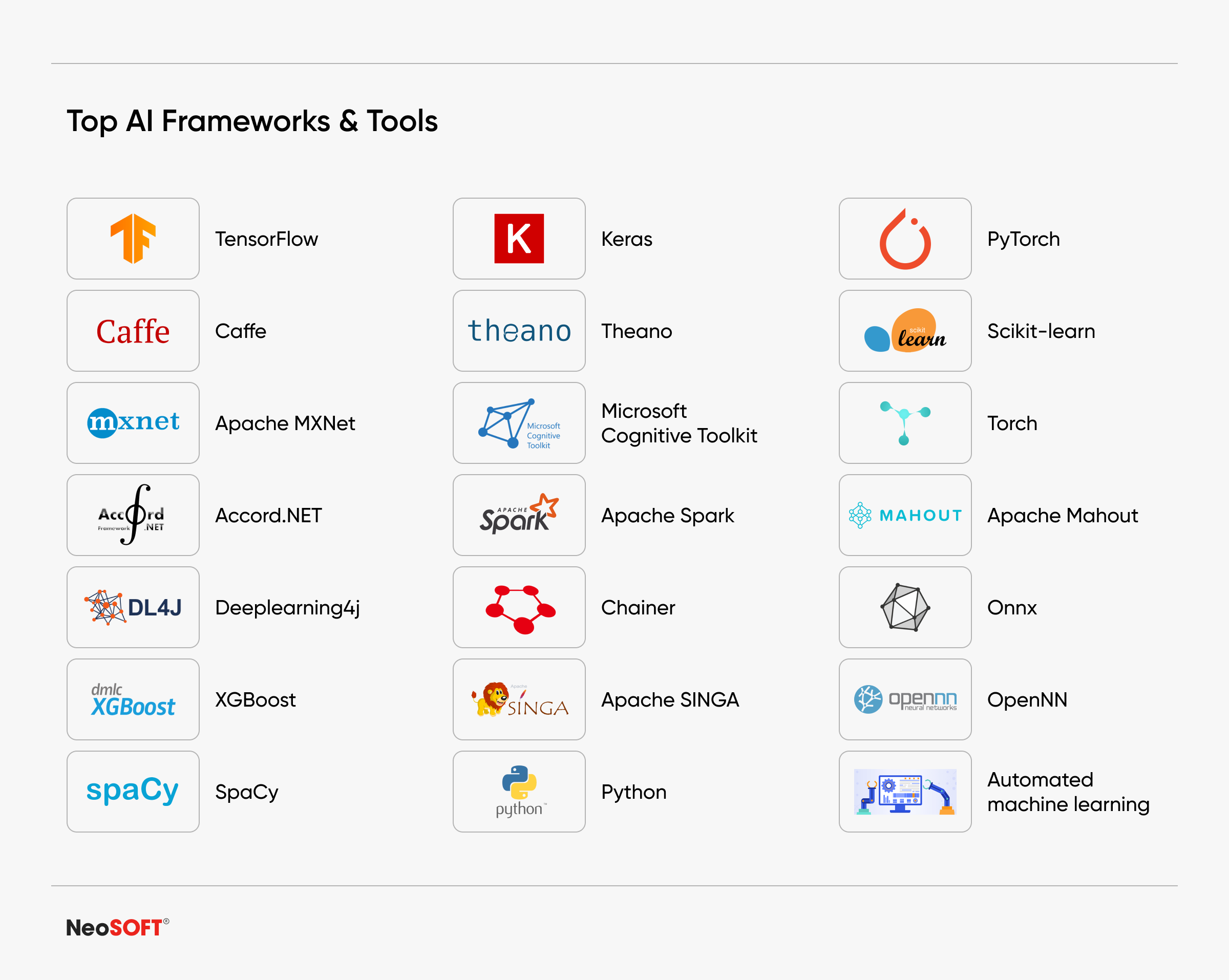

What are the tools and frameworks used for prompt engineering?

Several tools and frameworks are available to help with prompt engineering. Some of the most popular include:

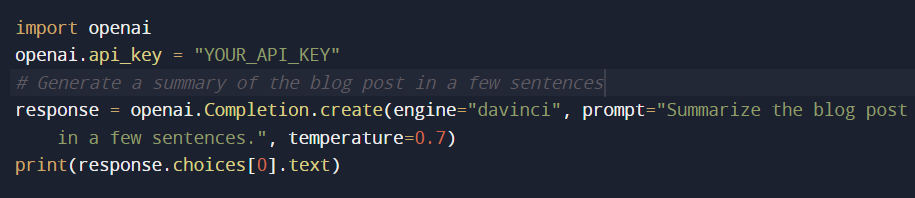

OpenAI Playground: A web-based tool that allows you to experiment with different prompt styles and see how they affect the output of LLMs.

PromptHub: A collection of prompts for various tasks, including code generation, translation, and creative writing.

PromptBase: A database of prompts for LLMs, including prompts for specific tasks and domains.

PromptCraft: A tool that helps you to design and evaluate prompts for LLMs.

In addition to these general-purpose tools, developers are designing several tools and frameworks for specific tasks or domains. For example, there are tools for prompt engineering for code generation, translation, and creative writing.

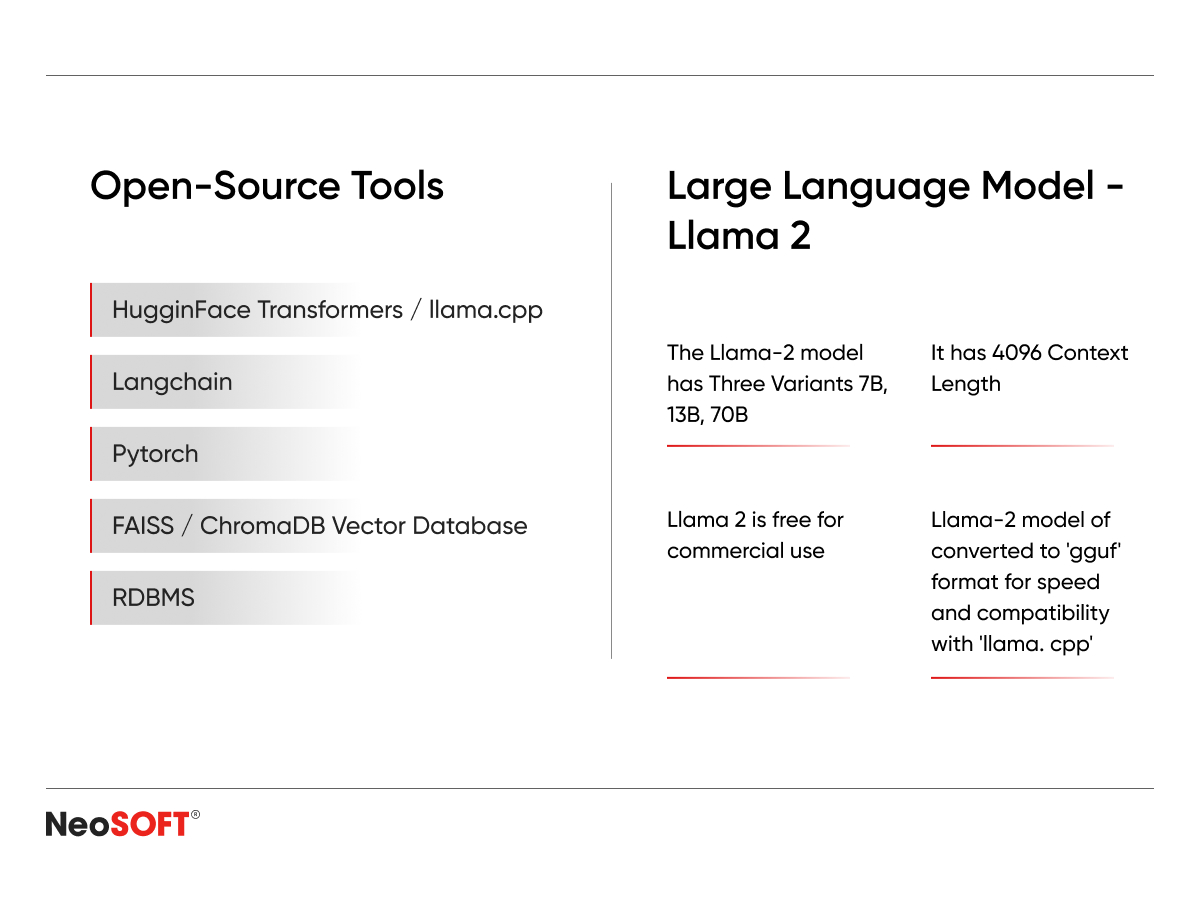

What are some examples of named tools and frameworks?

Here are some specific examples of tools and frameworks for prompt engineering:

Hugging Face Transformers: A Python library for natural language processing (NLP) and computer vision tasks that includes tools for prompt engineering.

LangChain: An open-source Python library that makes it easier to build applications powered by LLMs. It provides a comprehensive set of tools and abstractions for prompt engineering.

LaMDA Playground: A web-based tool that allows you to experiment with LaMDA, a large language model developed by Google AI.

Bard Playground: A web-based tool enabling you to experiment with Bard, a large language model developed by Google AI.

What are some real-life use cases of prompt engineering?

Prompt engineering drives the functionality of several real-world applications, such as:

- Content generation: LLMs generate content for websites, blogs, and social media platforms.

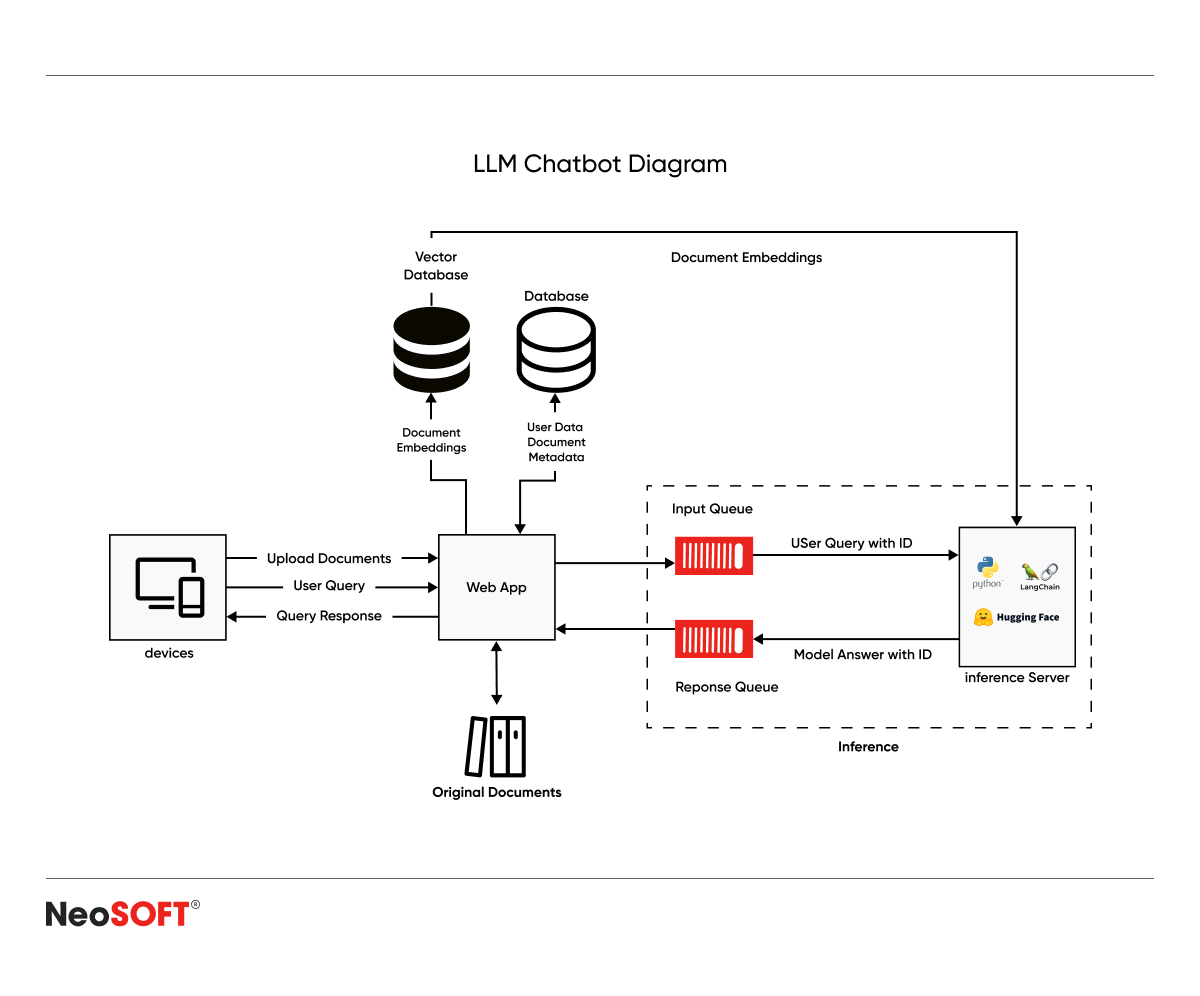

- Chatbots and virtual assistants: LLMs power chatbots and virtual assistants that provide customer support, answer questions, and book appointments.

- Data analysis and insights: LLMs can analyze large volumes of data and extract insights from them.

- Language translation and localization: LLMs translate text from one language to another and adapt content for different cultures.

- Customized recommendations: LLMs provide personalized user recommendations, such as products, movies, and music.

- Healthcare diagnostics: LLMs can analyze medical data, flag potential health issues, and support pre-consultation, diagnosis, and treatment.

- Legal document analysis: LLMs analyze legal documents and identify critical information.

- Financial data interpretation: LLMs can interpret financial data and identify trends.

- Code generation and assistance: LLMs generate code and assist programmers.

The Future of Prompt Engineering

Prompt engineering is a rapidly evolving field that will become even more critical as LLMs become more powerful and versatile.

A key trend in prompt engineering is the creation of new tools and techniques that use machine learning to improve prompts. These advancements aim to automate the process of generating and assessing prompts, making it simpler and more efficient.

Another trend is the development of domain-specific prompts tailored to specific tasks or domains like healthcare, finance, or law.

Finally, there is a growing interest in developing prompts that can be used to generate creative content, such as poems, stories, and music.

As prompt engineering evolves, it will significantly affect how we interact with computers. For instance, it could lead to new types of user interfaces that are more intuitive and natural. It could also enable new applications that help us be more productive and creative.

Overall, the future of prompt engineering is bright. As LLMs become more capable and flexible, prompt engineering will play an increasingly central role in helping us fully leverage the potential of these powerful tools.

By Rohan Dhere, AI Engineer