Applying AI in Development Projects

Artificial Intelligence (AI) is changing how software is built and what it can do. From personalized recommendations to predictive analytics, AI can turn traditional applications into intelligent systems that learn from data and adapt to user needs. This blog explores how to build smart applications by integrating AI into development projects. We’ll look at the main types of AI, their advantages for software applications, and the steps for bringing AI into your development process.

What does AI in software development include?

AI in software development encompasses a variety of techniques and technologies that enable applications to mimic aspects of human intelligence. Machine Learning (ML) is the foundation of most modern AI, allowing applications to learn patterns from data and make predictions without being explicitly programmed for each case. Natural Language Processing (NLP) enables applications to understand and interpret human language, giving rise to chatbots and virtual assistants.

Computer Vision allows applications to process and analyze visual data, enabling tasks like facial recognition and image classification. Deep Learning, a subset of ML, uses artificial neural networks to process vast amounts of complex data, contributing to advancements in speech recognition and autonomous vehicles.

What are the benefits of incorporating AI into development projects?

Integrating AI into development projects brings several benefits for application performance and user experience. Personalized recommendations, produced by AI algorithms that analyze user behaviour, lead to tailored content and product suggestions, which can improve customer satisfaction and engagement. Automation is another key advantage: AI-driven processes take over repetitive tasks, increasing efficiency and reducing human error. Predictive analytics uses AI models trained on historical data to anticipate upcoming trends and outcomes, supporting informed decision-making and planning.

How to prepare your development team for AI integration?

Before starting AI integration, it is essential to prepare your development team. Assessing the gaps in AI skills and knowledge within the team helps identify areas for training and upskilling. Collaboration with data scientists and AI experts fosters cross-functional learning and ensures a cohesive approach to AI integration. Understanding the data requirements of AI models is crucial, as high-quality data forms the foundation of effective AI applications.

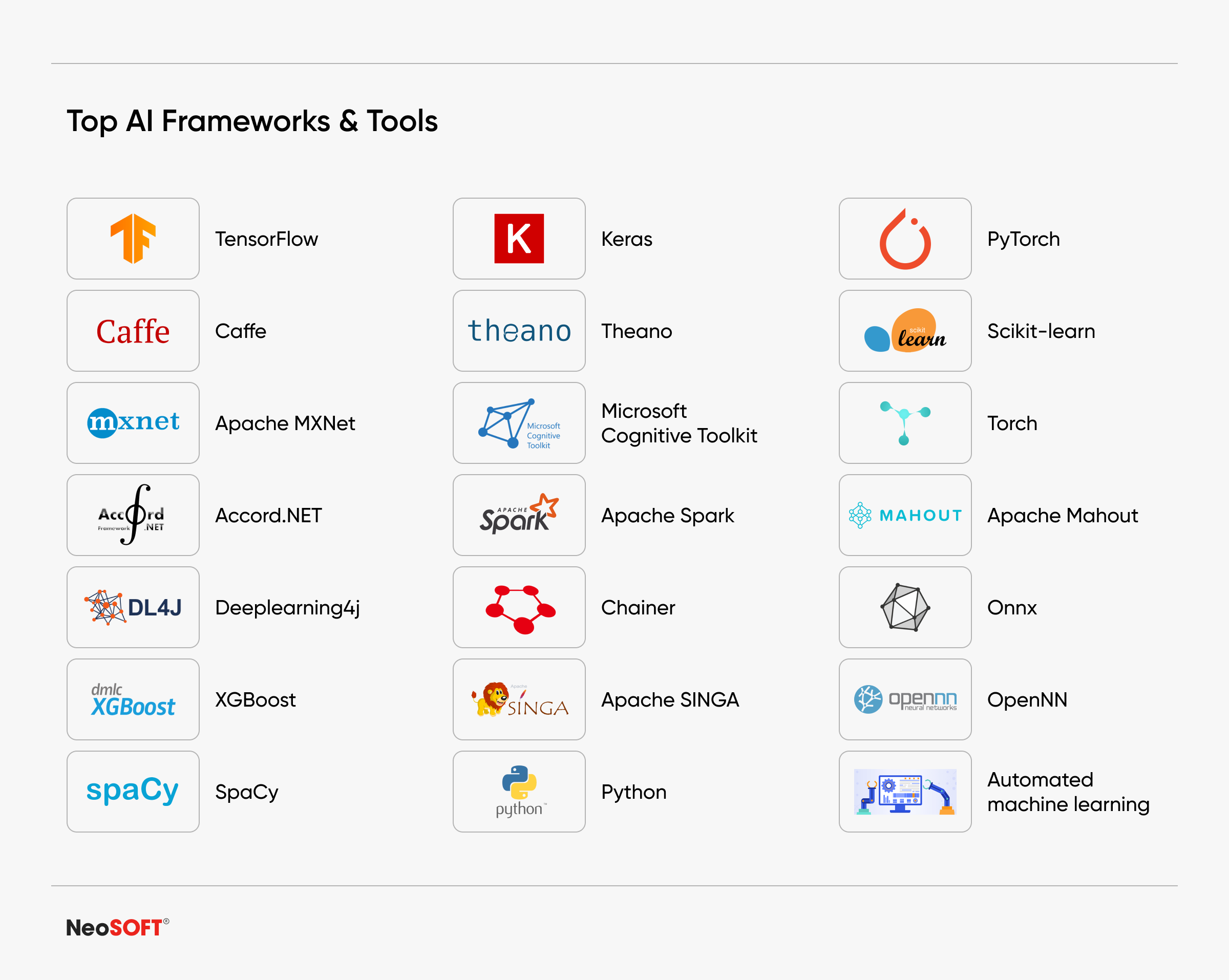

How to select the right AI frameworks and tools?

Choosing the appropriate AI frameworks and tools is key to successful AI integration. TensorFlow and PyTorch are popular frameworks for ML and deep learning tasks. Scikit-learn offers a rich set of tools for classical ML, while Keras provides a user-friendly, high-level interface for building neural networks. The right framework depends on project requirements and team expertise. Developers should also familiarize themselves with AI development tools such as Jupyter Notebooks for prototyping and model deployment platforms for moving models into production.

What are AI models?

AI models are computational systems trained on data to perform tasks without explicit programming. They encompass a range of techniques, including supervised learning for predictions from labeled examples, unsupervised learning for finding structure in unlabeled data, reinforcement learning for decision-making, and specialized models for NLP and computer vision. These models underpin many AI applications, from chatbots and recommendation systems to image recognition and autonomous vehicles, by leveraging patterns learned from data.

What do data collection and preprocessing for AI models involve?

Data collection and preprocessing are vital components of AI model development. High-quality data, representative of real-world scenarios, is essential for training AI models effectively. Proper data preprocessing techniques, including data cleaning and feature engineering, ensure the data is ready for AI training.
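As a rough illustration of the cleaning and feature engineering steps mentioned above, here is a minimal sketch in plain Python. The record fields (`user_id`, `age`, `purchases`) and the function names are hypothetical, and real projects would usually rely on a data library rather than hand-written loops.

```python
from statistics import median


def clean_records(records):
    """Drop exact duplicate rows and fill missing ages with the median age."""
    seen = set()
    unique = []
    for rec in records:
        key = (rec["user_id"], rec["age"], rec["purchases"])
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))  # copy so the raw data stays untouched
    known_ages = [r["age"] for r in unique if r["age"] is not None]
    fill_value = median(known_ages)
    for r in unique:
        if r["age"] is None:
            r["age"] = fill_value
    return unique


def min_max_scale(values):
    """Rescale a list of numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def build_features(records):
    """Turn cleaned records into numeric feature vectors."""
    ages = min_max_scale([r["age"] for r in records])
    purchases = min_max_scale([r["purchases"] for r in records])
    return [[a, p] for a, p in zip(ages, purchases)]


# Hypothetical raw data with one duplicate row and one missing value.
raw = [
    {"user_id": 1, "age": 20, "purchases": 2},
    {"user_id": 1, "age": 20, "purchases": 2},
    {"user_id": 2, "age": None, "purchases": 10},
    {"user_id": 3, "age": 40, "purchases": 6},
]
cleaned = clean_records(raw)
features = build_features(cleaned)
```

Scaling both columns to the same range keeps one feature from dominating distance-based models simply because its units are larger.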

Addressing data privacy and security concerns is equally crucial, especially when dealing with sensitive user data.
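One common precaution, sketched below under stated assumptions, is to remove direct identifiers and replace user IDs with keyed hashes before data reaches a training pipeline. The field names (`name`, `email`, `user_id`) and helper names are hypothetical, and the key shown is a placeholder; pseudonymization like this reduces exposure but is not the same as full anonymization.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_direct_identifiers(record, secret_key, drop_fields=("name", "email")):
    """Return a copy of the record without direct identifiers."""
    cleaned = {k: v for k, v in record.items() if k not in drop_fields}
    cleaned["user_id"] = pseudonymize(str(record["user_id"]), secret_key)
    return cleaned


# Placeholder key for illustration; a real key would come from a secrets store.
KEY = b"replace-with-a-secret-key"
record = {"user_id": 42, "name": "Ada", "email": "ada@example.com", "purchases": 7}
safe_record = strip_direct_identifiers(record, KEY)
```

Because the same ID always maps to the same digest under one key, records for a user can still be joined without exposing the original identifier.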

What does developing AI models for your applications include?

Building AI models is a fundamental step in AI integration. Depending on the application’s specific requirements, developers can choose from various algorithms and techniques. Training AI models involves feeding them the prepared data and tuning them for optimal performance. Evaluating the models on held-out data with relevant metrics, such as accuracy, precision, and recall, helps confirm that they are accurate and effective enough for the application.
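The train-then-evaluate loop described above can be sketched with a deliberately simple model. The example below uses a nearest-centroid classifier written in plain Python on made-up data; the function names and numbers are illustrative assumptions, not a recommended algorithm for any particular application.

```python
def train_centroids(X, y):
    """Training: compute the mean feature vector (centroid) of each class."""
    sums, counts = {}, {}
    for features, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Inference: pick the class whose centroid is closest to the input."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


def evaluate(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Made-up training data and a separate held-out test set.
X_train = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.5], [8.0, 8.0], [9.0, 8.5], [8.5, 9.5]]
y_train = [0, 0, 0, 1, 1, 1]
X_test = [[1.2, 0.8], [7.5, 9.0], [5.5, 5.5], [2.5, 2.0]]
y_test = [0, 1, 0, 0]

model = train_centroids(X_train, y_train)
y_pred = [predict(model, x) for x in X_test]
metrics = evaluate(y_test, y_pred)
```

Keeping the test set separate from the training data is what makes the metrics a fair estimate of how the model behaves on unseen inputs.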

Why is integrating AI models into your applications important?

Integrating AI models into applications requires careful consideration of the integration method. Embedding AI models within the application code allows direct interaction between the model and other components. Developers also need to address real-time inference and deployment challenges, such as latency and failure handling, so that the models function efficiently in the production environment.
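One way to embed a model in application code is to hide it behind a small service class that validates inputs, records latency, and falls back to a default when inference fails. The sketch below assumes the model is any Python callable; `ModelService`, `toy_model`, and the response fields are hypothetical names chosen for illustration.

```python
import time


class ModelService:
    """Thin wrapper that the rest of the application calls for predictions."""

    def __init__(self, model, n_features, fallback):
        self._model = model            # any callable: features -> prediction
        self._n_features = n_features  # expected input length
        self._fallback = fallback      # value returned if inference fails

    def predict(self, features):
        if len(features) != self._n_features:
            raise ValueError(
                f"expected {self._n_features} features, got {len(features)}"
            )
        start = time.perf_counter()
        try:
            prediction = self._model(features)
            source = "model"
        except Exception:
            # Keep the application responsive even if the model breaks.
            prediction = self._fallback
            source = "fallback"
        latency_ms = (time.perf_counter() - start) * 1000
        return {"prediction": prediction, "source": source, "latency_ms": latency_ms}


def toy_model(features):
    """Stand-in for a trained model loaded at application start-up."""
    return 1 if sum(features) > 1.0 else 0


service = ModelService(toy_model, n_features=2, fallback=0)
response = service.predict([0.9, 0.4])
```

Routing every call through one wrapper gives a single place to add logging, caching, or a swap to a remote inference endpoint later.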

Why is testing and validation of AI integration crucial?

Rigorous testing and validation are critical for the success of AI-integrated applications. Unit testing ensures that individual AI components function correctly, while integration testing ensures that AI models work seamlessly with the rest of the application. Extensive testing helps identify and address issues or bugs before deploying the application to end users.
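To make the distinction between unit and integration tests concrete, the sketch below tests a toy text pipeline with Python's built-in `unittest` module. The functions `tokenize`, `keyword_sentiment`, and `handle_review` are hypothetical stand-ins; the keyword rule is only a placeholder for a trained model.

```python
import unittest


def tokenize(text):
    """Preprocessing component: lowercase and split on whitespace."""
    return text.lower().split()


def keyword_sentiment(tokens):
    """Placeholder for a trained sentiment model."""
    positive = {"good", "great", "love"}
    negative = {"bad", "poor", "hate"}
    score = sum(t in positive for t in tokens) - sum(t in negative for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def handle_review(text):
    """Application-level function that calls the preprocessing and the model."""
    if not text.strip():
        return {"status": "rejected", "label": None}
    return {"status": "ok", "label": keyword_sentiment(tokenize(text))}


class TokenizeUnitTest(unittest.TestCase):
    def test_lowercases_and_splits(self):
        self.assertEqual(tokenize("Great  Product"), ["great", "product"])


class SentimentModelUnitTest(unittest.TestCase):
    def test_positive_tokens(self):
        self.assertEqual(keyword_sentiment(["love", "it"]), "positive")

    def test_unknown_tokens_are_neutral(self):
        self.assertEqual(keyword_sentiment(["ok", "phone"]), "neutral")


class ReviewIntegrationTest(unittest.TestCase):
    def test_review_is_labelled_end_to_end(self):
        result = handle_review("I love this great phone")
        self.assertEqual(result, {"status": "ok", "label": "positive"})

    def test_blank_review_is_rejected(self):
        self.assertEqual(handle_review("   ")["status"], "rejected")


def run_tests():
    """Collect all test cases and run them, returning the result object."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    for case in (TokenizeUnitTest, SentimentModelUnitTest, ReviewIntegrationTest):
        suite.addTests(loader.loadTestsFromTestCase(case))
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_tests()
```

The first two classes check each component in isolation, while the last one exercises the full path from raw input to the application's response.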

The journey of building intelligent applications continues after deployment. Continuous improvement is vital to AI integration, as AI models must adapt to changing data patterns and user behaviours.

Developers should plan for continual learning and regular updates so that AI models remain relevant and accurate. Model monitoring is equally important for detecting model drift and performance degradation. By continuously monitoring model performance in the production environment, developers can address issues proactively and retrain models when needed.
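A simple way to watch for drift is to compare the distribution of a feature in production against the distribution seen at training time. The sketch below uses the Population Stability Index (PSI) for that comparison; the bin count, the epsilon for empty bins, and the 0.1 and 0.25 thresholds are rule-of-thumb assumptions that should be tuned per project.

```python
import math


def population_stability_index(reference, live, bins=5, eps=1e-6):
    """Compare two samples of one feature; larger values mean more drift."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the range
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(reference)
    actual = proportions(live)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(psi, warn=0.1, alert=0.25):
    """Map a PSI value to a coarse status using assumed thresholds."""
    if psi < warn:
        return "stable"
    if psi < alert:
        return "moderate drift"
    return "significant drift"


# Made-up data: the live feature values have shifted upward by 4 units.
reference = [i / 10 for i in range(100)]
live_same = list(reference)
live_shifted = [v + 4 for v in reference]

psi_same = population_stability_index(reference, live_same)
psi_shifted = population_stability_index(reference, live_shifted)
```

A status of "significant drift" would be a reasonable trigger for investigating the data source and considering retraining.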

Addressing ethical considerations in AI development

As AI integration becomes more prevalent, addressing ethical considerations is essential. AI bias and fairness are critical areas of concern, as biased AI models can lead to discriminatory outcomes. Ensuring transparency and explainability of AI decisions is essential for building trust with users and stakeholders. Privacy and security of user data must also be managed properly, both to protect users and to comply with applicable legislation.
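One basic fairness check is to compare how often a model gives a positive outcome to different groups, a measure often called the demographic parity difference. The sketch below uses made-up predictions and group labels; it is only one of several fairness measures, and what counts as an acceptable gap is a judgment for the project.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (value 1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up model outputs for two hypothetical groups, "a" and "b".
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
```

A gap of zero means every group receives positive predictions at the same rate; a large gap is a signal to examine the training data and features more closely.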

Conclusion

In conclusion, incorporating AI into development projects opens up possibilities for creating innovative, efficient, and user-centric software. By understanding the different types of AI, selecting the right frameworks and tools, and identifying suitable use cases, developers can use AI to deliver personalized experiences and predictive insights. Preparing the development team, integrating AI models carefully, and continuously monitoring and improving them are crucial steps in creating successful AI-driven applications. Addressing ethical considerations ensures that AI applications are not only intelligent but also responsible and trustworthy. As AI technology advances, integrating AI into software development projects is likely to shape the future of applications and pave the way for a more intelligent and connected world.